I was forced to use a 3750G as a router yesterday for a WAN link that was only 70Mb. The LAN interfaces were all gig. The customer wanted to ensure that 30% of the bandwidth was available for EF marked packets. Everything else was to get 70%.

A lot of people have trouble with QoS on the 3750. This is mainly due to the tiny buffers, complexity, and the defaults it uses.

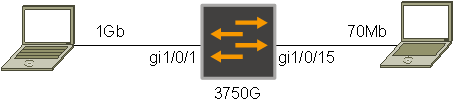

Let’s use the following network for this post:

The laptop on the left is connected to a gig port and runs iperf on Linux. The laptop on the right is connected to a hard-coded 100Mb port. However, the link itself needs to act like a 70Mb port, as the carrier is policing it to 70Mb.

Before we turn any QoS on, let’s get a benchmark. I’m going to send 5 sessions from the iperf server with DSCP 0 and 5 sessions with DSCP EF:

Server

$ iperf -c 37.46.204.2 -w 128k -t 600 -i 5 --tos 0 -P 5

$ iperf -c 37.46.204.2 -w 128k -t 600 -i 5 --tos 184 -P 5
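A quick aside on where 184 comes from: iperf's --tos flag takes the whole ToS byte, and the DSCP sits in the upper six bits of that byte, so the code point is shifted left by two. Here is a minimal sketch of the conversion; the function name is my own and is not part of iperf:

```python
def dscp_to_tos(dscp: int) -> int:
    """Return the ToS byte for a 6-bit DSCP code point, leaving the two ECN bits at 0."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp << 2


EF = 46  # Expedited Forwarding code point

print(dscp_to_tos(EF))  # 184
print(dscp_to_tos(0))   # 0
```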

Both outputs show bandwidth used is 50/50:

DSCP 0

[SUM] 0.0- 5.0 sec 28.9 MBytes 48.5 Mbits/sec

DSCP EF

[SUM] 5.0-10.0 sec 29.1 MBytes 48.8 Mbits/sec

Also note the output drops on gi1/0/15. Remember we are going from a gig interface to a 100Mb interface. This is after 30 seconds:

QOS_TEST#sh int gi1/0/15 | include drops

Input queue: 0/75/0/0 (size/max/drops/flushes); Total output drops: 2145

MLS QoS on

I’ll now simply turn QoS on and nothing else:

QOS_TEST(config)#mls qos

QOS_TEST(config)#end

Running the same iperf commands above I see this:

DSCP 0 retest

[SUM] 0.0- 5.0 sec 55.6 MBytes 93.3 Mbits/sec

DSCP EF retest

[SUM] 5.0-10.0 sec 2.55 MBytes 4.27 Mbits/sec

Voice packets are only getting 4% of the interface speed. Why is this? This is a default on the 3750 and you’ll need to do a little digging. First we need to see which queue EF packets will get into:

QOS_TEST#sh mls qos maps dscp-output-q

Dscp-outputq-threshold map:

d1 :d2 0 1 2 3 4 5 6 7 8 9

------------------------------------------------------------

0 : 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01

1 : 02-01 02-01 02-01 02-01 02-01 02-01 03-01 03-01 03-01 03-01

2 : 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01

3 : 03-01 03-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01

4 : 01-01 01-01 01-01 01-01 01-01 01-01 01-01 01-01 04-01 04-01

5 : 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01

6 : 04-01 04-01 04-01 04-01

It’s a bit cryptic, but we can see that DSCP value 46 will map to queue 1, while DSCP 0 maps to queue 2. Let’s now check the default queueing structure on our interface:
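If the table is hard to read by eye: d1 is the tens digit of the DSCP value, d2 is the units digit, and each cell is queue-threshold. The sketch below parses the rows exactly as printed above and looks up a couple of values. The helper name is hypothetical and this is just an illustration of how to read the map, not a Cisco tool:

```python
# Rows copied from the "sh mls qos maps dscp-output-q" output above.
MAP_ROWS = """\
0 : 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01 02-01
1 : 02-01 02-01 02-01 02-01 02-01 02-01 03-01 03-01 03-01 03-01
2 : 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01 03-01
3 : 03-01 03-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01
4 : 01-01 01-01 01-01 01-01 01-01 01-01 01-01 01-01 04-01 04-01
5 : 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01 04-01
6 : 04-01 04-01 04-01 04-01
"""


def parse_dscp_map(text: str) -> dict[int, tuple[int, int]]:
    """Map each DSCP value to its (queue, threshold) pair."""
    table = {}
    for line in text.strip().splitlines():
        d1, cells = line.split(":", 1)          # d1 = tens digit of the DSCP
        for d2, cell in enumerate(cells.split()):  # d2 = units digit
            queue, threshold = cell.split("-")
            table[int(d1) * 10 + d2] = (int(queue), int(threshold))
    return table


dscp_map = parse_dscp_map(MAP_ROWS)
print(dscp_map[46])  # (1, 1): EF goes to queue 1, threshold 1
print(dscp_map[0])   # (2, 1): best effort goes to queue 2, threshold 1
```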

QOS_TEST#sh mls qos interface gi1/0/15 queueing

GigabitEthernet1/0/15

Egress Priority Queue : disabled

Shaped queue weights (absolute) : 25 0 0 0

Shared queue weights : 25 25 25 25

The port bandwidth limit : 100 (Operational Bandwidth:100.0)

The port is mapped to qset : 1

Shaped queue weights shows 25 0 0 0. This actually means that queue 1 is shaped to 1/25 of the interface speed: 100/25 = 4. A weight of 0 means the queue is not shaped and uses the shared weights instead. This is why we are seeing 4Mb for EF traffic.
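The inverse-weight arithmetic is easy to get backwards, so here is a small sketch of it. The function name is mine, and it only models the 1/weight rule described above, not the switch's actual scheduler:

```python
def shaped_rate_mbps(port_speed_mbps: float, weight: int):
    """Rate a shaped queue is held to: port speed divided by the weight.

    A weight of 0 means the queue is not shaped, so None is returned.
    """
    if weight == 0:
        return None
    return port_speed_mbps / weight


# Default shaped weights on a 100Mb port: 25 0 0 0
for queue, weight in enumerate([25, 0, 0, 0], start=1):
    rate = shaped_rate_mbps(100, weight)
    label = "not shaped" if rate is None else f"{rate:.1f} Mb/s"
    print(f"queue {queue}: {label}")
```

Queue 1 comes out at 4.0 Mb/s, which lines up with the 4.27 Mbits/sec iperf reported for EF.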

After 30 seconds I took a new reading of the drops and we see this:

QOS_TEST#sh int gi1/0/15 | include drops

Input queue: 0/75/0/0 (size/max/drops/flushes); Total output drops: 8022

A lot worse.

The fix

The first thing we need to do is remove the shaping from the interface:

QOS_TEST(config-if)#srr-queue bandwidth shape 0 0 0 0

Now I want to give 30% to EF and 70% to BE. I don’t want these to be hard-policed so I use the share command:

srr-queue bandwidth share 30 70 1 1

The share command allows a queue to use bandwidth that the other queues are not using. These numbers are not 1/x like the shape command. Rather, IOS adds all the values up (102 in our case) and then gives queue 1 a 30/102 share of the bandwidth, roughly 29%.
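To make the share arithmetic concrete, here is a minimal sketch that turns the four weights into fractions of the link. The function name is hypothetical and it only models the weight ratio when all four queues are busy:

```python
def shared_fractions(weights: list[int]) -> list[float]:
    """Fraction of the link each queue is guaranteed when every queue is congested."""
    total = sum(weights)
    return [w / total for w in weights]


weights = [30, 70, 1, 1]  # srr-queue bandwidth share 30 70 1 1
for queue, fraction in enumerate(shared_fractions(weights), start=1):
    print(f"queue {queue}: {fraction:.1%}")
```

Queue 1 ends up just under 30% and queue 2 just under 70%, because queues 3 and 4 have to be given a weight of at least 1 each.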

This is all great for 100Mb, but remember our link is getting policed to 70Mb. So we need to add this:

srr-queue bandwidth limit 70

Let’s verify:

QOS_TEST#sh mls qos interface gi1/0/15 queueing

GigabitEthernet1/0/15

Egress Priority Queue : disabled

Shaped queue weights (absolute) : 0 0 0 0

Shared queue weights : 30 70 1 1

The port bandwidth limit : 70 (Operational Bandwidth:70.38)

The port is mapped to qset : 1

DSCP 0 final

[SUM] 10.0-15.0 sec 29.0 MBytes 48.7 Mbits/sec

DSCP EF final

[SUM] 10.0-15.0 sec 11.2 MBytes 18.9 Mbits/sec
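As a sanity check, the two iperf readings can be compared against the configured weights. This sketch assumes queues 3 and 4 are idle, so only the 30:70 ratio between queues 1 and 2 matters. The variable names are mine:

```python
# Measured iperf throughput from the final test, in Mbits/sec.
ef_mbps = 18.9
be_mbps = 48.7

total_mbps = ef_mbps + be_mbps
ef_share = ef_mbps / total_mbps
be_share = be_mbps / total_mbps

# Configured ratio between the two active queues (30 and 70).
configured_ef = 30 / (30 + 70)

print(f"total:      {total_mbps:.1f} Mb/s")
print(f"EF share:   {ef_share:.1%} (configured {configured_ef:.0%})")
print(f"BE share:   {be_share:.1%}")
```

The measured split is about 28/72 with a combined rate a little under the 70Mb limit, which is close to the 30/70 that was configured. Bear in mind that iperf reports TCP payload throughput, so it will not match the port counters exactly.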

Note too that if I send only EF or only BE packets, each can use up to 70Mb. The shares above only apply when both are sending and the total exceeds 70Mb.

One issue that still remains is that I’m getting these drops after 30 seconds:

QOS_TEST#sh int gi1/0/15 | include drops

Input queue: 0/75/0/0 (size/max/drops/flushes); Total output drops: 5212

In order to properly tune buffers I thoroughly recommend a read through this document: https://supportforums.cisco.com/docs/DOC-8093

Of course, in the real world you should calculate the maximum amount of voice traffic you are going to send. You would never have more voice traffic than if every person in your company were on an external call at the same time.
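Here is a rough sketch of that sizing exercise. Everything in it is an assumption for illustration: G.711 at 20 ms packetization, IPv4/UDP/RTP headers with no compression, 18 bytes of Ethernet overhead, and a made-up headcount of 150. Swap in your own codec and numbers:

```python
def g711_call_bps(packet_interval_ms: int = 20, l2_overhead_bytes: int = 18) -> int:
    """Bandwidth of one G.711 call in one direction, in bits per second."""
    payload = 64_000 // 8 * packet_interval_ms // 1000  # 160 bytes at 20 ms
    headers = 20 + 8 + 12                               # IPv4 + UDP + RTP
    packets_per_second = 1000 // packet_interval_ms     # 50 at 20 ms
    return (payload + headers + l2_overhead_bytes) * 8 * packets_per_second


def worst_case_voice_mbps(users: int) -> float:
    """Voice load if every user is on an external call at once."""
    return users * g711_call_bps() / 1_000_000


users = 150                  # hypothetical headcount
ef_share_mbps = 70 * 30 / 102  # EF queue's share of the 70Mb link

needed = worst_case_voice_mbps(users)
print(f"per call:  {g711_call_bps() / 1000:.1f} kb/s")
print(f"needed:    {needed:.2f} Mb/s")
print(f"EF share:  {ef_share_mbps:.2f} Mb/s")
print(f"fits:      {needed <= ef_share_mbps}")
```

With these assumptions each call costs 87.2 kb/s, so 150 simultaneous calls need about 13 Mb/s, which fits inside the EF share.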

If I change the above test so that the server is sending 15Mb of UDP traffic marked DSCP EF, then I can see that the TCP BE traffic drops while no drops are on the EF queue:

iperf -c 37.46.204.2 -u -b 15m -p 5002 -t 5

No packets dropped in the EF stream:

[ 3] 0.0- 5.0 sec 7.76 MBytes 13.0 Mbits/sec 1.776 ms 0/ 5532 (0%)

Checking the port drop statistics on the 3750G:

QOS_TEST#sh platform port-asic stats drop gi1/0/15

Interface Gi1/0/15 TxQueue Drop Statistics

Queue 0

Weight 0 Frames 0

Weight 1 Frames 0

Weight 2 Frames 0

Queue 1

Weight 0 Frames 276

Weight 1 Frames 0

Weight 2 Frames 0

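No voice packets dropped there either. Note that this output numbers the queues from 0, so Queue 0 is egress queue 1 (EF) and Queue 1 is egress queue 2 (BE), which is where the 276 dropped frames are.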